Surprisingly, most of the challenges faced by analytics engineers aren’t super technical. They don’t involve rearchitecting your data warehouse, learning a new language, or even encoding business processes into data models. The largest challenges involve working with people, creating the right processes, using the right tools, and gaining a clear understanding of the data.

These are such big challenges because they require many teams to come together and work towards a shared goal. As an analytics engineer, I’ve found these problems can’t be solved on an individual or even a data team level. They need to be worked towards as an entire company. While changing processes within an organization can be a challenge, it’s one we all face and make strides toward overcoming every day.

In my 3+ years as an analytics engineer, here are some recurring challenges I’ve faced. Hopefully, you can learn from my personal experience and avoid the mistakes I see (and have made) way too often.

1. “Our metrics are trapped in a maze of BI tools”

When a metric is trapped in a BI tool, you have no way to uphold a shared metric definition. The definition of the same metric calculated in different reports and dashboards begins to drift over time. One calculation is updated to fit the new shared definition while the other lags behind. Soon, you have no way to know which metric is the source of truth!

When there’s no source of truth with your data, stakeholders lose trust in models, dashboards, and reports. If metrics conflict with one another, their confidence in the data you provide them with plummets. They’d rather find their own solutions, leading your organization away from becoming truly data-driven. Not to mention, without a source of truth, you can’t adopt advanced AI-driven analytics. This will only lead to more data quality issues within the organization.

I ran into this issue a few months ago when updating a referrals model to point to new data sources. After finishing the model, I repointed metric calculations for the number of recommendations and referrals found in dbt™ to this new data model. However, I didn’t realize these metrics were also used in our BI tool. A few weeks later, we discovered that some metrics were pointing to the old model and others to the new model, creating a wave of confusion among stakeholders.

Instead of using our dashboards, they created their own scrappy solution (which also happened to be incorrect). Instead of being defined within BI tools, metric calculations should be moved to a shared transformation tool such as dbt. Here, they can be updated in one place and then referenced downstream in multiple dashboards or analyses.

In my first analytics engineering role, once I saw how slow the data models were to run and the dashboards to update, I made it my mission to move our most important metrics to a scalable tool like dbt. In situations like this, it’s usually best to start from scratch and facilitate conversations on how metrics should be calculated. This requires gathering the right stakeholders and discussing a shared metric definition. While repeating work that was once done is painful, it’s necessary when models aren’t built to be sustainable.

2. “It’s impossible to govern business logic”

When code is trapped in your BI tool, there is no visibility into what business logic exists, how it’s calculated, and how often it’s used. Analytics engineers can’t see what data analysts write, disconnecting them from the logic directly tied to the business.

Too often, I find myself writing something and spending a lot of time on logic in a data model only to learn that this has already been coded within a dashboard or report. This is not only a waste of my time, but disadvantageous to the analysts that spent so much time on the original code!

When logic is ungoverned within BI tools, there’s a much higher possibility for things to go wrong. Because no centralized logic exists, stakeholders and analysts can easily reference outdated logic without knowing. Many BI tools lack the ability to search for metric calculations or track down dependencies, making it even easier to break the code in place.

Last year, I realized that I needed a dimensional model for products. I worked closely with engineers to understand certain nuances in the data, piecing together the tables and what the fields in them meant for the business. A few weeks in, I learned that a version of this code already existed within our BI tool.

Unfortunately, I had no idea. While the work wasn’t a complete waste, it could have been sped up by talking with the data analyst who originally built out this model in our BI tool.

3. “High data warehouse compute costs, slow dashboard load time”

When data teams consist of mostly data analysts and no analytics engineers, they often begin writing their data models directly in BI tools like Tableau and Looker. While this starts as an easy solution to data transformation, it quickly snowballs into unscalable data models trapped in your BI tool (as describe in the first challenge).

Data rarely ever follows a clear pattern, which means data models grow complicated fast. As complexity grows, your BI tool can no longer handle transformation in a timely manner, leading to slow dashboard load time and high data warehouse compute costs.

When I first started as an analytics engineer, I discovered data models built on top of other complex data models, trapped within the BI tool. They were impossible to debug because the code kept building off of the model it referenced, clearly not written to scale with the business. The models were impossible to untangle and optimize. Some would take over 24 hours to run, preventing stakeholders’ dashboards from being updated at the expected timeframe.

Naivety led me to try to understand these models and re-write them, but eventually, I realized that it’s often better to start from scratch to fix technical issues like this. To avoid rewriting your data models, you need to build them to be scalable in the first place. This means writing them outside of the BI tool, in a place where you can version control your code and follow best practices. While this takes more time upfront, it saves you a world of headaches in the future.

4. “We’re drowning in analytics engineering bottlenecks”

As analytics engineers, there is never enough time to build the data models needed to support the questions asked by stakeholders. Growth, product, and marketing teams are always asking for more data. When a revenue data model becomes the top priority, questions that only a potential attribution model can answer become the business’s focus. Week after week, teams will ask for updates on the new dimension they want to analyze for growth, or the latest driver of revenue.

The data team is forced to keep tickets for new dashboards and reports in the backlog because they don’t yet have the foundational datasets in place to power them. The stress of shooting requests down left and right starts to weigh on you, causing you to question if you are working on the right projects. Unfortunately, if you want to give stakeholders high-quality data, you need to say no and balance tradeoffs.

If you don’t have the data models to support the questions being asked, you most likely should not try answering them. This is because the data needed to answer them is highly complex and takes weeks, or even months, to be modeled. High-quality data models take time and lots of thought to build.

As analytics engineers, it’s important to notice the questions you are asked again and again by stakeholders. Noticing this pattern will help you decide which data models will be the best use of your time to build.

In the last few months, my data team received lots of questions on revenue within the business, a data model that was previously built but was quite the black box. Because of all the uncertainty caused by this model, we decided to take rebuilding the revenue model as a large project. This way, we can fully understand the data and its edge cases, giving stakeholders answers that we are confident in.

While this is a project that’s months in the making, it’s important to communicate to the business the value of doing things slow but scalable. Speed is an important tradeoff for high-quality, scalable data models.

5. “Analyst ↔ Analytics Engineer: the back-and-forth”

Most companies lack shared terminology and metric definitions across the business. You’ll often find analysts talking to business stakeholders and calculating a metric one way while analytics engineers are talking with engineers and calculating a metric another way.

This leads to tedious back-and-forth conversations on Slack between many different people, with everyone trying to get their specific questions answered. The team silos and time zone differences make it painful to try to capture everyone’s ideas in one shared metric, by the business’s deadline.

Because these conversations happen in so many different places across the business, various groups of people have different ideas of what the same metric means. Over time this leads to conflicting numbers for the same metric, causing distrust in the data team.

“Active user” is a classic metric that causes so much confusion among business teams. Marketing may see this as someone who has visited the company’s website organically in the last week while growth views it as someone who has clicked into the product five times in the last month. If one team measures this one way and the other an opposite way, despite using the same language, both teams will end up very confused.

Analysts and analytics engineers must facilitate multiple conversations with the right people so that together they can decide what a metric means. This requires holding one another accountable for following shared definitions and consulting one another whenever stakeholders request a new metric.

If you had to sum up these 5 challenges in one: Trust your data models

As analysts embrace AI and self-serve BI tools, maintaining a consistent data model is crucial. Well-governed data models build trust in data products. However, business logic is dynamic and constantly changing.

Large organizations struggle to balance analysts’ autonomy in creating new terms with central governance, which often leads to business logic chaos and undermines trust in the organization’s data.

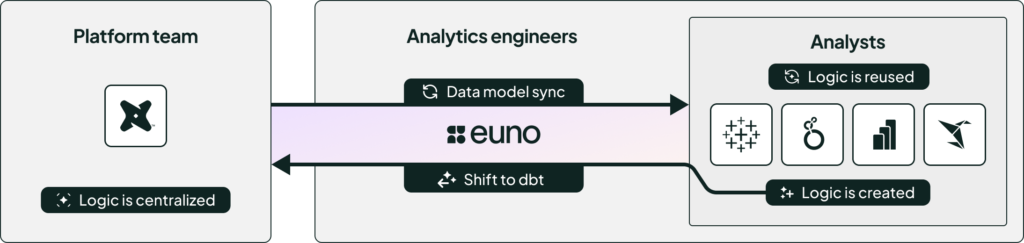

While analytics engineers are guaranteed to face these challenges every week, we now have tools like Euno to help ease the burden and battle burnout. Euno helps analytics engineers balance self-serve analytics and data model governance, without slowing down time-to-insight. Data analysts can focus on business questions, while Euno handles the necessary data model changes, in your transformation and metrics layers.

The main advantage of Euno is how it leverages the power of dbt. Euno integrates with dbt and extends its governance into the BI layer. In other words, Euno helps analytics engineers move metrics out of the BI layer so they can exist in a central location where they can be updated as they change. It allows you to govern business logic, giving you complete visibility to where metric calculations are being used and how often they’re being used.

How does Euno help your data team?

Build a source of truth for metrics in dbt

Euno allows you to build a semantic layer in dbt with minimal lift. It automatically codifies selected logic from measures created in your BI tools into dbt/MetricFlow. Euno keeps this central metrics layer synchronized with Looker, Tableau, PowerBI, and Sigma, and provides access to data scientists through an SDK.

Cut analytics engineering bottlenecks

Free up analytics engineers from the backlog of grunt work. With over 50% of their time spent keeping dbt and the BI layer in sync, Euno automates repetitive and redundant data modeling tasks, so your team can focus on more strategic work.

Centrally govern your entire data model

Achieve complete end-to-end visibility into your data model lineage with all your dbt and BI logic mapped out. Understand calculations, dependencies, and utilization patterns and use Euno’s advanced governance KPIs and remediation guidelines to prioritize your data governance activities.

Boost performance through rapid materialization

Fully automate the process to generate pre-aggregate models based on dbt metrics, optimizing slow query times & reducing compute costs. Create summarized data tables, select the exact columns to group by, choose the appropriate join paths, and add filters for more refined data aggregation.

Conclusion

Investments in the right tools and processes will allow you to move fast while also creating a sustainable, scalable data environment. Analytics engineers can’t run from these challenges, but they can find smarter ways to solve them. Don’t spend hours picking through data models trapped in BI layers like I did. Use a tool like Euno to streamline your process, and more importantly, build a dynamic and reliable foundation for AI-driven analytics–making you AI-ready.